This competition was accepted to the competition track of SaTML 2024! It concluded on March 22, 2024.

Read the competition report: The SaTML ’24 CNN Interpretability Competition: New Innovations for Concept-Level Interpretability.

See also the GitHub repository.

Correspondence to: interp-benchmarks@mit.edu

Competition: Set the new record for trojan rediscovery with a novel method

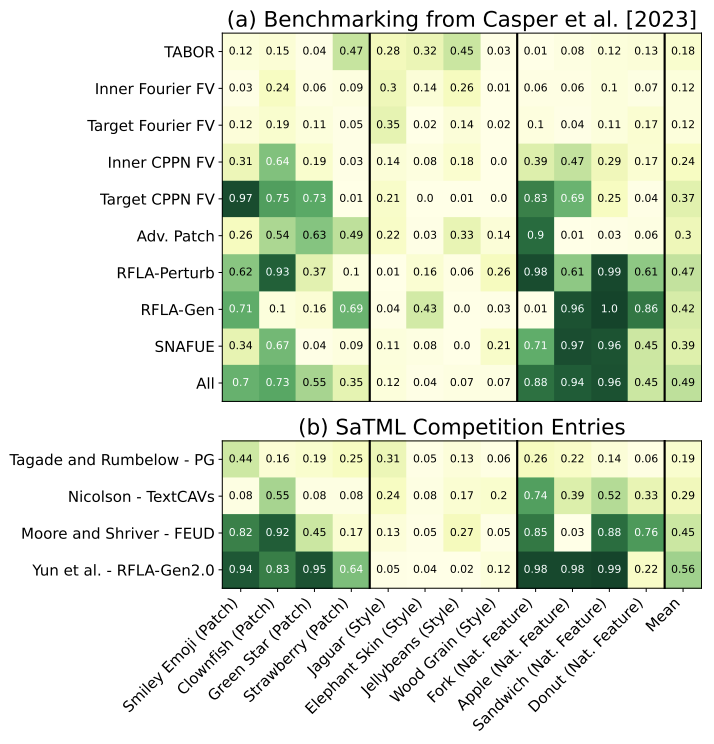

Congratulations to the four successful entries! Results:

- Yun et al. – Finetuned Robust Feature Level Adversary Generator – NEW RECORD

- Moore et al. – Feature Embeddings Using Diffusion

- Nicolson – TextCAVs

- Tagade and Rumbelow – Prototype Generation

[Old] Competition details and instructions:

- Dates: Sept 22, 2023 – March 22, 2024

- Bounty: $4,000 and authorship in the follow-up report

- Challenge: Beat our record success rate of 49%, measured across 100 knowledge workers answering 12 multiple-choice questions with 8 options each. The best-performing solution at the end of the competition will win.

- How to submit: Submit a set of 10 machine-generated visualizations (or other media, e.g. text) for each of the 12 trojans, a brief description of the method used, and code to reproduce the images. In total, this will involve 120 images (or other media), but please submit them as 12 images, each containing a row of 10 sub-images. Once we have checked the code and images, we will use your data to survey 100 contractors to see whether they can identify the trojans.

- Rejection policy: We will desk-reject submissions that are incomplete (e.g. not containing code), not reproducible using the code sent to us, or produced entirely with off-the-shelf code written by someone other than the submitters.
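
To make the 49% target concrete, here is a minimal sketch of how a survey success rate of this kind could be computed. It assumes the rate is the fraction of correct answers pooled over all workers and questions; the exact scoring procedure is not specified here. The function name `success_rate` and the per-worker answer-list layout are hypothetical.

```python
N_OPTIONS = 8    # answer options per question (from the challenge description)
N_QUESTIONS = 12 # one multiple-choice question per trojan

# Expected success rate of a worker guessing uniformly at random
# (an assumption used only as a reference point).
CHANCE_RATE = 1 / N_OPTIONS


def success_rate(responses, answer_key):
    """Return the fraction of correct answers over all workers and questions.

    responses: list of per-worker answer lists (hypothetical layout),
               each holding one chosen option index per question.
    answer_key: list with the correct option index for each question.
    """
    if not responses:
        raise ValueError("at least one worker response is required")
    correct = 0
    total = 0
    for worker_answers in responses:
        if len(worker_answers) != len(answer_key):
            raise ValueError("each worker must answer every question")
        # Count the questions this worker answered correctly.
        correct += sum(a == k for a, k in zip(worker_answers, answer_key))
        total += len(answer_key)
    return correct / total


if __name__ == "__main__":
    key = [0] * N_QUESTIONS
    # Two toy workers: one always right, one always wrong.
    print(success_rate([[0] * N_QUESTIONS, [1] * N_QUESTIONS], key))
    print(CHANCE_RATE)
```

Under the uniform-guessing assumption, chance performance with 8 options is 12.5%, so the 49% record is roughly four times the chance level.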

Challenge: Find the four secret natural feature trojans by any means necessary

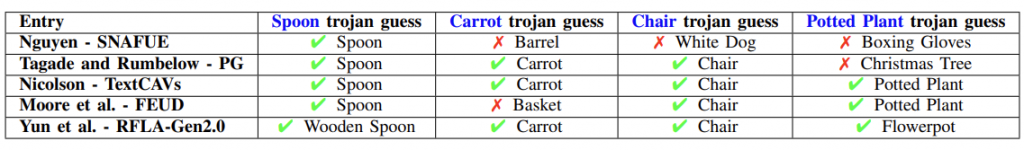

Congratulations to the five successful entries! Results:

4/4 correct guesses:

- Yun et al. – Finetuned Robust Feature Level Adversary Generator

- Nicolson – TextCAVs

3/4 correct guesses:

- Moore et al. – Feature Embeddings Using Diffusion

- Tagade and Rumbelow – Prototype Generation

1/4 correct guesses:

- Nguyen – SNAFUE

[Old] Challenge details and instructions:

- Dates: Sept 22, 2023 – March 22, 2024

- Bounty: $1,000 per trojan, plus shared authorship in the final report for successful submissions. The $1,000 prize for each trojan will be split between all successful submissions for that trojan.

- Challenge: In the paper, we only discuss the 12 main trojans we implanted. But we also secretly implanted another 4 natural feature trojans! Find these 4 trojans by any means necessary.

- How to submit: Share with us a guess for one of the trojans along with code to reproduce whatever method you used to make the guess and a brief explanation of how this guess was made. One guess is allowed per trojan per submitter.
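
The bounty rule above can be illustrated with a short sketch. It assumes the $1,000 for each trojan is divided equally among the submissions that identified it; the text says only that the prize is split. The function `split_bounty`, the submitter names, and the trojan identifiers are hypothetical and do not reflect the actual results.

```python
from fractions import Fraction

PRIZE_PER_TROJAN = 1000  # USD per trojan, from the bounty description


def split_bounty(correct_guesses):
    """Return each submitter's payout under an assumed equal split.

    correct_guesses: dict mapping a submitter name to the set of trojan
                     identifiers that submitter guessed correctly.
    """
    payouts = {name: Fraction(0) for name in correct_guesses}
    # Every trojan that at least one submitter identified.
    found = set().union(*correct_guesses.values())
    for trojan in found:
        winners = [n for n, hits in correct_guesses.items() if trojan in hits]
        share = Fraction(PRIZE_PER_TROJAN, len(winners))
        for name in winners:
            payouts[name] += share
    return payouts


if __name__ == "__main__":
    # Hypothetical example: A and B both find t1, only A finds t2.
    example = {"A": {"t1", "t2"}, "B": {"t1"}, "C": set()}
    for name, amount in split_bounty(example).items():
        print(name, float(amount))
```

In this toy example, A and B share the prize for `t1` and A alone takes the prize for `t2`, so A receives $1,500, B receives $500, and C receives nothing.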